Introduction: Advancing Equity in Oncology Patient Engagement

The oncology landscape is witnessing a rapid transformation driven by technology, personalized medicine, and artificial intelligence (AI). Yet amid these advances, a significant challenge persists: ensuring that patient outreach efforts are equitable and free from algorithmic bias. AI-powered tools, when used indiscriminately, can unintentionally reinforce disparities, particularly for underrepresented populations, minority groups, and socioeconomically disadvantaged patients.

Fair AI in oncology outreach is about more than technology; it is about trust, dignity, and access. Pharma marketers today must focus not only on precision targeting but also on ensuring that their campaigns are ethically designed, culturally sensitive, and inclusive. This article explores the strategies, technologies, and ethical frameworks needed to prevent bias in oncology patient outreach, and presents actionable steps for pharma companies to build fair, trustworthy, and patient-first campaigns.

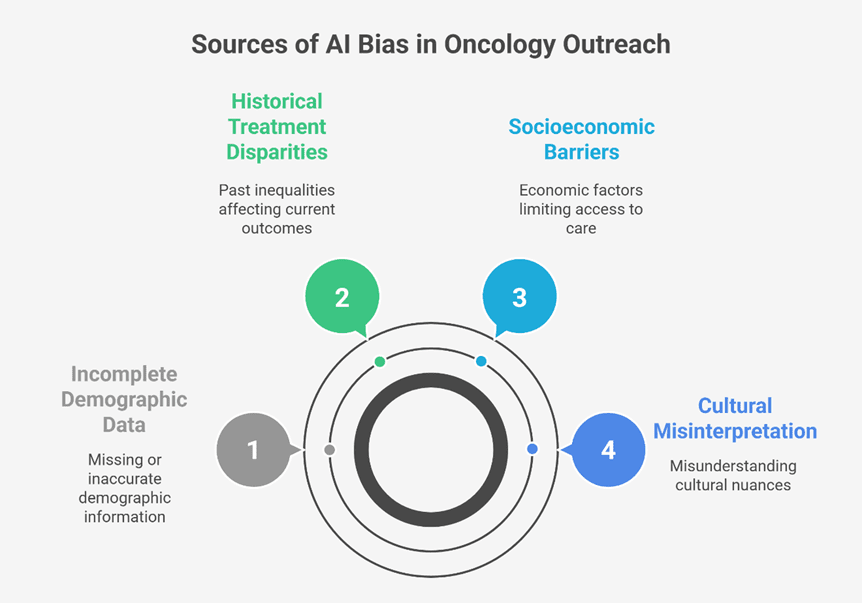

1. Understanding Bias in AI-Driven Oncology Outreach

AI systems learn from historical data. Unfortunately, healthcare data often reflects systemic inequalities, ranging from underdiagnosed diseases in certain communities to socioeconomic barriers in accessing treatment. Without careful calibration, AI algorithms can perpetuate these biases by:

- Prioritizing outreach to urban populations with better internet access.

- Ignoring minority groups due to limited data representation.

- Reinforcing stereotypes based on age, gender, or ethnicity.

Recognizing the root causes of bias is essential to ensuring AI’s ethical application in oncology outreach. Pharma marketers must collaborate with clinicians, data scientists, and patient advocacy groups to audit data sources, refine models, and actively identify bias patterns before scaling campaigns.
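
As one way to start a data-source audit, the sketch below compares each group's share of a dataset with its share of a reference population. The field name `region`, the toy records, the reference shares, and the 0.8 review threshold are all hypothetical assumptions chosen for illustration, not values from any real campaign.

```python
from collections import Counter

def representation_ratios(records, reference_shares, key="region"):
    """Return (dataset share / reference share) for each group.

    A ratio well below 1.0 suggests the group is underrepresented
    in the data relative to the reference population.
    """
    counts = Counter(r[key] for r in records)
    total = sum(counts.values())
    ratios = {}
    for group, ref_share in reference_shares.items():
        data_share = counts.get(group, 0) / total if total else 0.0
        ratios[group] = data_share / ref_share
    return ratios

# Hypothetical toy data: 8 urban records and 2 rural records.
records = [{"region": "urban"}] * 8 + [{"region": "rural"}] * 2
# Hypothetical reference shares, for example from census figures.
reference = {"urban": 0.5, "rural": 0.5}

ratios = representation_ratios(records, reference)
# Flag groups whose ratio falls below an assumed review threshold of 0.8.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(ratios, flagged)
```

A flagged group is a prompt for human review of how the data was collected, not proof of bias on its own.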

2. Why Fairness Matters in Oncology Marketing

Bias in oncology outreach has direct health consequences. Patients excluded from awareness campaigns are less likely to seek timely screenings, participate in trials, or access treatment support networks.

Key reasons fairness matters:

- Improved Health Outcomes: Equitable outreach increases screening rates and early diagnosis in underserved communities.

- Enhanced Trust: Patients are more likely to engage with campaigns that acknowledge their unique needs and cultural nuances.

- Regulatory Compliance: Ethical marketing practices support adherence to data privacy laws, anti-discrimination policies, and healthcare standards.

- Long-Term Brand Reputation: Pharma companies committed to fairness gain goodwill and patient loyalty.

3. The Role of AI in Addressing Bias

AI offers powerful tools to correct bias when applied thoughtfully:

AI Techniques to Enhance Fairness:

- Data Augmentation: Expanding datasets to include underrepresented patient demographics.

- Bias Detection Algorithms: Identifying skewed patterns in campaign reach or engagement metrics.

- Context-Aware Personalization: Tailoring content to match cultural, linguistic, and behavioral profiles.

- Feedback Loops: Incorporating patient-reported experiences to continuously refine AI-driven outreach.
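
Collecting more data from underrepresented groups is the direct form of data augmentation. Where that is not yet possible, a lighter-weight and different technique is to reweight existing samples so that each group carries equal total weight during model training. The sketch below shows this inverse-frequency reweighting; the group labels are hypothetical.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """Weight each sample so every group contributes equally in total.

    weight = n_samples / (n_groups * group_count)
    """
    counts = Counter(groups)
    n_samples = len(groups)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[g]) for g in groups]

# Hypothetical labels: group "A" has three samples, group "B" has one.
groups = ["A", "A", "A", "B"]
weights = inverse_frequency_weights(groups)
print(weights)
```

Reweighting does not add new information about a small group; it only stops the larger group from dominating, so it complements rather than replaces better data collection.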

Ethical Guidelines for AI Usage:

- Avoid reinforcing stereotypes in messaging.

- Incorporate patient consent at every stage of data usage.

- Ensure transparency in how algorithms determine outreach priorities.

4. Designing Inclusive Oncology Campaigns

A fair outreach campaign balances personalization with inclusivity. Below are steps to ensure campaigns are bias-free:

Step 1 – Diverse Data Sources

Use data from varied regions, languages, and socioeconomic contexts. For instance:

- Public health records.

- Community health worker reports.

- Patient advocacy groups’ feedback.

Step 2 – Bias Testing Before Launch

Before a campaign is released:

- Conduct A/B testing across demographic groups.

- Analyze differences in engagement rates.

- Adjust algorithms to mitigate underrepresentation.
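
The pre-launch comparison above can be sketched as a simple disparity check on pilot results. The group names, counts, and the 0.8 review threshold below are hypothetical assumptions; a real test would also need sample sizes large enough for the differences to be statistically meaningful.

```python
def engagement_rates(stats):
    """stats maps group -> (messages_delivered, messages_engaged)."""
    return {
        group: engaged / delivered
        for group, (delivered, engaged) in stats.items()
        if delivered > 0
    }

def disparity_ratio(rates):
    """Lowest group rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical pilot results per demographic group.
pilot = {"urban": (1000, 200), "rural": (500, 50)}

rates = engagement_rates(pilot)
ratio = disparity_ratio(rates)
# Assumed rule of thumb: review the campaign if the ratio drops below 0.8.
needs_review = ratio < 0.8
print(rates, ratio, needs_review)
```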

Step 3 – Ethical Review Panels

Involve cross-functional teams, including ethicists, patient advocates, and oncologists, to review campaign scripts, visuals, and AI models for fairness.

5. Case Study: AI Correcting Outreach Disparities

A leading pharma brand launched an oral cancer awareness campaign targeting urban populations. Post-launch analysis revealed that rural regions with higher tobacco usage saw less engagement. The campaign team implemented AI-driven localization strategies by:

- Incorporating local dialects and cultural references.

- Partnering with rural health workers to collect patient feedback.

- Updating targeting algorithms to prioritize underserved districts.

This intervention increased rural engagement by 28% within six months.

6. Fair AI and Digital Health Tools

Digital health platforms, mobile apps, and chatbots are increasingly used for oncology patient outreach. Ensuring fairness in these tools requires:

- Avoiding language that stigmatizes certain health conditions.

- Offering multi-language support to reduce language barriers.

- Providing equal recommendations for screening irrespective of insurance status or geography.

7. Multilingual Outreach to Reduce Bias

Language is one of the most significant barriers in oncology awareness. Patients from diverse backgrounds often miss critical information due to language gaps. Fair AI algorithms must prioritize:

- Automated translation tools tailored to medical terminology.

- Voice-based interfaces that deliver content in local dialects.

- In-app glossaries explaining symptoms, treatment steps, and side effects.

Localized content not only improves comprehension but also builds trust by respecting patient identity.

8. Ethical Targeting Algorithms

Ethical targeting involves creating algorithms that actively counter exclusionary patterns. For example:

- Ensuring AI recommends screening campaigns to low-income areas as often as it does to higher-income areas.

- Adjusting content frequency so that minority groups are not overwhelmed or ignored.

- Incorporating feedback loops that flag content perceived as insensitive.

By embedding fairness metrics into algorithm design, pharma companies can promote equitable outreach at scale.
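
One possible way to embed a fairness constraint directly into targeting is to guarantee every district a minimum share of outreach before distributing the rest by model score. The sketch below illustrates the idea; the district names, scores, budget, and the 40% floor are hypothetical, and the function is an illustration rather than an established allocation method.

```python
def allocate_with_floor(scores, budget, floor_share):
    """Split `budget` contacts across districts.

    A fraction `floor_share` of the budget is divided equally among all
    districts as a guaranteed minimum; the remainder is split in
    proportion to the model scores.
    """
    n = len(scores)
    floor = floor_share * budget / n
    remaining = budget - floor * n
    total_score = sum(scores.values())
    plan = {}
    for district, score in scores.items():
        if total_score > 0:
            extra = remaining * score / total_score
        else:
            extra = remaining / n
        plan[district] = floor + extra
    return plan

# Hypothetical model scores that strongly favor district_a.
scores = {"district_a": 9, "district_b": 1}
plan = allocate_with_floor(scores, budget=1000, floor_share=0.4)
print(plan)
```

Without the floor, district_b would receive only 100 of the 1,000 contacts; with it, the district receives 260 while the model still influences the remainder.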

9. Collaborations with Community Stakeholders

Community partnerships are essential in identifying blind spots in outreach campaigns. Fair AI initiatives benefit from:

- Working with NGOs specializing in cancer awareness in marginalized areas.

- Co-developing content with survivor groups to reflect real-life concerns.

- Engaging local leaders to promote screenings and combat stigma.

Collaborations ensure campaigns resonate at the grassroots level and avoid biases inherent in top-down approaches.

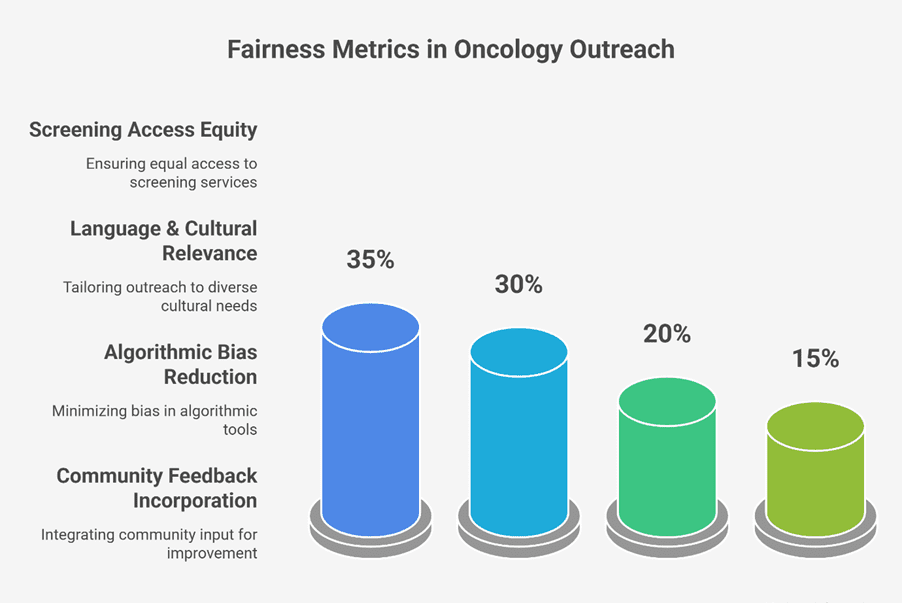

10. Monitoring and Reporting Fairness Metrics

Fair AI requires ongoing evaluation. Pharma marketers should measure:

- Outreach distribution by geography, income, and ethnicity.

- Screening uptake among underrepresented groups.

- Sentiment analysis to detect adverse reactions to campaign messaging.

Fairness metrics must be treated as key performance indicators, not just ethical checkboxes.

11. Addressing Unintended Consequences

Even well-intentioned algorithms can cause harm. Pharma companies must remain vigilant for:

- Over-targeting vulnerable populations, leading to campaign fatigue.

- Under-targeting due to privacy constraints, causing exclusion.

- Mistrust arising from overly generic recommendations.

A transparent approach, where patients understand how their data is used, helps mitigate these risks.

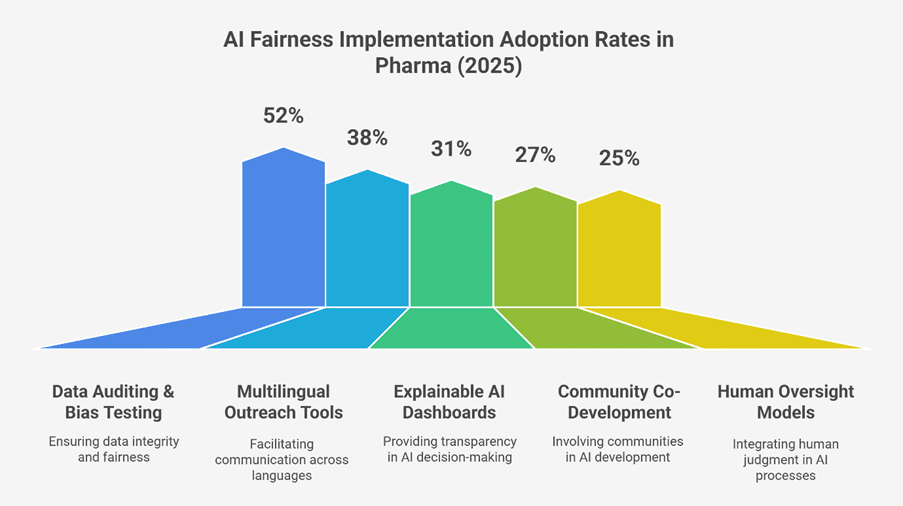

12. The Importance of Explainability in AI Models

AI explainability refers to making algorithmic decisions understandable to stakeholders. This is crucial in oncology outreach because:

- Patients are more likely to trust recommendations if they know why certain screenings are suggested.

- Healthcare providers need clarity on how AI prioritizes patient lists.

- Regulators require transparency to assess algorithm fairness.

Explainable AI frameworks should include dashboards that highlight data sources, reasoning paths, and adjustments made for fairness.
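
For a simple additive scoring model, a reasoning path can be as basic as listing how much each input contributed to the final score. The sketch below assumes such a linear model; the feature names and weights are hypothetical placeholders, not clinically validated values, and more complex models would need dedicated explanation methods.

```python
def explain_score(weights, features):
    """Return the total score and each feature's contribution to it."""
    contributions = {
        name: weights[name] * features.get(name, 0.0) for name in weights
    }
    return sum(contributions.values()), contributions

# Hypothetical weights; a real model's would come from training and validation.
weights = {"age_over_50": 2.0, "family_history": 3.0, "tobacco_use": 1.5}
# Hypothetical patient profile (1 = present, 0 = absent).
patient = {"age_over_50": 1, "family_history": 0, "tobacco_use": 1}

score, contributions = explain_score(weights, patient)
# The two largest contributions can be surfaced as plain-language reasons.
top_reasons = sorted(contributions, key=contributions.get, reverse=True)[:2]
print(score, top_reasons)
```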

13. Predictive Analytics with Ethical Guardrails

Predictive analytics helps identify patients at higher risk, but fairness must guide these models:

- Avoid race-based risk predictions unless medically validated.

- Use socioeconomic indicators without reinforcing stigma.

- Balance sensitivity and specificity to prevent false positives that disproportionately affect certain groups.

For example, a risk model prioritizing early breast cancer screening should incorporate family history and lifestyle data without biasing against minority groups lacking formal medical records.
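
To check whether a risk model's errors fall unevenly across groups, sensitivity and false positive rate can be computed separately for each group. The sketch below does this on a small set of hypothetical labeled predictions; the group names and outcomes are invented for illustration.

```python
from collections import defaultdict

def group_error_rates(rows):
    """rows: iterable of (group, actual, predicted) with boolean labels.

    Returns {group: {"sensitivity": ..., "false_positive_rate": ...}}.
    A rate is None when the group has no cases of the relevant kind.
    """
    tally = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for group, actual, predicted in rows:
        if actual:
            key = "tp" if predicted else "fn"
        else:
            key = "fp" if predicted else "tn"
        tally[group][key] += 1

    result = {}
    for group, c in tally.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        result[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "false_positive_rate": c["fp"] / negatives if negatives else None,
        }
    return result

# Hypothetical validation rows: (group, actual high risk, predicted high risk).
rows = [
    ("group_a", True, True), ("group_a", True, False),
    ("group_a", False, False), ("group_a", False, False),
    ("group_b", True, True), ("group_b", False, True),
    ("group_b", False, True), ("group_b", False, False),
]
rates = group_error_rates(rows)
print(rates)
```

In this toy data, group_b has a much higher false positive rate while group_a has lower sensitivity, which is the kind of imbalance that should prompt a closer look at thresholds and training data.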

14. Integrating Human Oversight

AI is powerful but should not replace human judgment. Oncology outreach benefits from hybrid models where:

- AI recommends actions but doctors validate them before implementation.

- Community workers review patient feedback and contextual nuances.

- Patient advisory boards co-create outreach strategies with marketers.

Human oversight ensures that campaigns remain compassionate, ethical, and aligned with patient realities.

15. Privacy and Consent in Fair AI Campaigns

Fairness extends beyond algorithm design to how patient data is collected and used. Ethical oncology outreach includes:

- Obtaining explicit consent before using personal health information.

- Ensuring anonymization where possible to prevent misuse.

- Offering opt-out options in campaign tracking tools.

Respecting privacy not only complies with regulations but also strengthens patient trust, especially in communities historically skeptical of healthcare systems.
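
A minimal sketch of these three practices, assuming hypothetical record fields (`patient_id`, `consent`, `opted_out`, `region`) and a placeholder secret key: records without consent or with an opt-out are dropped, and the direct identifier is replaced with a keyed hash. Note that this is pseudonymization rather than full anonymization, since remaining fields may still allow re-identification.

```python
import hashlib
import hmac

def pseudonymize(patient_id, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(
        secret_key, patient_id.encode("utf-8"), hashlib.sha256
    ).hexdigest()

def prepare_for_analytics(records, secret_key):
    """Keep only consenting, non-opted-out patients; drop direct identifiers."""
    prepared = []
    for record in records:
        if record.get("consent") and not record.get("opted_out"):
            prepared.append({
                "pid": pseudonymize(record["patient_id"], secret_key),
                "region": record["region"],
            })
    return prepared

# Placeholder key for illustration; a real key belongs in a secrets manager.
key = b"example-secret-key"
# Hypothetical records.
records = [
    {"patient_id": "p1", "consent": True, "opted_out": False, "region": "rural"},
    {"patient_id": "p2", "consent": False, "opted_out": False, "region": "urban"},
    {"patient_id": "p3", "consent": True, "opted_out": True, "region": "urban"},
]
safe_records = prepare_for_analytics(records, key)
print(len(safe_records))
```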

16. Addressing Health Literacy Disparities

AI-driven campaigns must account for differences in health literacy. Bias can arise when algorithms assume baseline medical knowledge that not all patients possess.

To counter this:

- Use simple, jargon-free language in automated messages.

- Provide visual aids that explain complex concepts.

- Offer voice guidance for patients who cannot read campaign material.

Health literacy is a crucial fairness metric that ensures no patient is left behind.
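
Automated messages can be screened for jargon before they are sent. The sketch below is a rough heuristic, not a validated readability formula: it flags English text with long sentences or a high share of long words. The limits (15 words per sentence, 20% of words with ten or more letters) are assumptions that would need tuning, and other languages would need their own rules.

```python
import re

def readability_flags(message, max_words_per_sentence=15, max_long_word_share=0.2):
    """Flag messages with long sentences or many long words."""
    sentences = [s for s in re.split(r"[.!?]+", message) if s.strip()]
    words = re.findall(r"[A-Za-z']+", message)
    if not sentences or not words:
        return {"avg_sentence_length": 0.0, "long_word_share": 0.0,
                "needs_rewrite": False}
    avg_length = len(words) / len(sentences)
    long_share = sum(len(w) >= 10 for w in words) / len(words)
    return {
        "avg_sentence_length": avg_length,
        "long_word_share": long_share,
        "needs_rewrite": (avg_length > max_words_per_sentence
                          or long_share > max_long_word_share),
    }

plain = "A free cancer check is open near you. Call us to book a time."
dense = ("Immunohistochemical characterization facilitates "
         "individualized chemotherapeutic stratification.")

plain_result = readability_flags(plain)
dense_result = readability_flags(dense)
print(plain_result["needs_rewrite"], dense_result["needs_rewrite"])
```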

17. Fairness in AI-Driven Clinical Trials Recruitment

Pharma companies increasingly use AI to identify eligible patients for clinical trials. Bias in recruitment can exclude diverse populations, resulting in non-representative research.

Fair recruitment models include:

- Adjusting eligibility criteria to account for under-documented health histories.

- Proactively including minority populations through targeted outreach.

- Tracking demographic representation continuously and rebalancing efforts when needed.

A more representative trial cohort helps ensure that treatments are tested in, and benefit, all segments of the population.
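
Continuous tracking of representation can be sketched as a comparison between current enrollment and target shares of the planned cohort. The group names, enrollment counts, and target shares below are hypothetical; real targets would typically be informed by disease epidemiology and regulatory guidance.

```python
def recruitment_gaps(enrolled, target_shares, planned_total):
    """Return how many more participants each group needs to reach its
    target share of the planned cohort (never negative)."""
    gaps = {}
    for group, share in target_shares.items():
        target = round(share * planned_total)
        gaps[group] = max(0, target - enrolled.get(group, 0))
    return gaps

# Hypothetical current enrollment and target shares.
enrolled = {"group_a": 70, "group_b": 10}
targets = {"group_a": 0.6, "group_b": 0.4}

gaps = recruitment_gaps(enrolled, targets, planned_total=100)
print(gaps)
```

A non-zero gap indicates where additional outreach is needed; it says nothing about why enrollment is low, which still requires input from sites and communities.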

18. AI and Mental Health Support in Oncology Outreach

Cancer patients often face anxiety, depression, and emotional isolation. Bias in mental health support can occur if AI tools fail to recommend resources that align with cultural attitudes or stigma.

Fair mental health interventions include:

- Offering counseling support in preferred languages.

- Addressing cultural beliefs around cancer treatment.

- Promoting peer groups that reflect shared experiences.

AI-driven mental health support must be tailored with empathy and cultural sensitivity to avoid alienating vulnerable patients.

19. Future-Proofing Fair AI in Oncology Outreach

As AI technologies evolve, fairness frameworks must adapt. Pharma companies should:

- Regularly update datasets to include new demographic trends.

- Train algorithms with diverse teams, including ethicists and patient advocates.

- Implement AI audits as part of routine campaign evaluation.

- Educate internal teams about unintended bias and ethical responsibilities.

Future-proofing fairness ensures that AI remains a tool for empowerment, not exclusion.

20. Addressing Algorithmic Blind Spots in Oncology Outreach

Algorithmic blind spots occur when AI systems overlook or underperform in identifying the needs of certain patient groups due to incomplete data, assumptions built into models, or socio-behavioral nuances that are difficult to capture. These blind spots can unintentionally exclude populations that are already marginalized in healthcare access.

Common Causes of Algorithmic Blind Spots:

- Historical underdiagnosis: Data from healthcare systems often reflect patients who already had access to care, leaving out those without prior interaction.

- Limited data from minority groups: Insufficient representation in datasets can skew AI predictions and outreach recommendations.

- Cultural and behavioral differences: Variations in how symptoms are described, perceived, or reported are often not adequately factored into algorithms.

- Technological limitations: Older devices or poor internet connectivity can prevent data from certain communities from being included in AI models.

Strategies to Identify and Mitigate Blind Spots:

- Data Enrichment

  - Partner with local NGOs and community health programs to collect additional data points.

  - Include patient-reported outcomes (PROs) and health diaries in structured formats to enrich datasets.

- Contextual Model Training

  - Integrate region-specific epidemiological data and lifestyle factors such as diet, occupational risks, and cultural taboos.

  - Implement cross-validation models that test AI recommendations against diverse demographic segments.

- Adaptive Learning Systems

  - Build feedback loops where AI systems continuously learn from new inputs.

  - Create adaptive thresholds where outreach triggers are modified based on changing patterns of disease or patient behavior.

- Human-in-the-loop Systems

  - Use AI to propose outreach strategies but involve healthcare professionals to validate recommendations.

  - Include patient advocacy groups in review boards to ensure fairness considerations are aligned with real-world patient experiences.

By systematically addressing blind spots, pharma marketers can create inclusive AI models that better reflect the lived experiences of all patients, especially those from underserved communities.
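
The adaptive thresholds mentioned above could take many forms. One minimal sketch, assuming a single monitored signal such as weekly symptom reports from a district, keeps a running baseline with an exponential moving average and triggers outreach when a new observation exceeds that baseline by a set factor. The class name, smoothing value, factor, and sample data are all hypothetical.

```python
class AdaptiveThreshold:
    """Track a running baseline with an exponential moving average and
    trigger when a new observation exceeds baseline * factor."""

    def __init__(self, initial_baseline, alpha=0.5, factor=1.5):
        self.baseline = initial_baseline
        self.alpha = alpha      # how quickly the baseline follows new data
        self.factor = factor    # how far above baseline counts as a trigger

    def update(self, observed):
        triggered = observed > self.baseline * self.factor
        # Update after the check so each value is compared with past data only.
        self.baseline = (self.alpha * observed
                         + (1 - self.alpha) * self.baseline)
        return triggered

# Hypothetical weekly counts for one district.
monitor = AdaptiveThreshold(initial_baseline=10.0)
signals = [monitor.update(count) for count in [10, 12, 20, 21]]
print(signals, monitor.baseline)
```

Because the baseline moves with the data, the jump to 20 triggers outreach while the following value of 21 does not, which reflects the trade-off between responsiveness and alert fatigue.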

Conclusion

Fair AI in oncology patient outreach is no longer optional; it is a moral imperative and a business necessity. By embedding equity, transparency, and empathy into AI-driven campaigns, pharma marketers can help life-saving information reach every patient, irrespective of geography, language, or socioeconomic status.

The road ahead is one where technology serves humanity, rather than amplifying existing disparities. Pharma companies that commit to fairness will not only enhance health outcomes but will also build lasting trust, credibility, and patient-centered relationships.

The future of oncology marketing belongs to those who balance data intelligence with human dignity and who measure success not by reach alone, but by the lives they touch fairly and compassionately.

The Oncodoc team is a group of passionate healthcare and marketing professionals dedicated to delivering accurate, engaging, and impactful content. With expertise across medical research, digital strategy, and clinical communication, the team focuses on empowering healthcare professionals and patients alike. Through evidence-based insights and innovative storytelling, Hidoc aims to bridge the gap between medicine and digital engagement, promoting wellness and informed decision-making.