Abstract

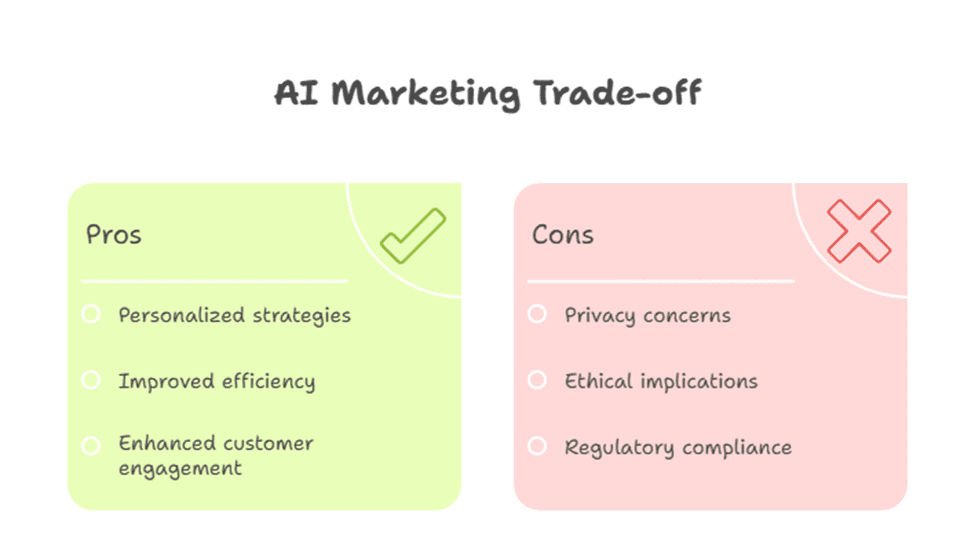

The integration of Artificial Intelligence (AI) into US oncology marketing promises unprecedented levels of personalization, efficiency, and insight, and could revolutionize how pharmaceutical companies engage with healthcare professionals (HCPs) and patients. From predictive analytics that guide targeted outreach to generative AI that crafts tailored content, the potential to enhance scientific exchange and patient support is immense. However, this transformative power comes with significant ethical responsibilities. The very capabilities that make AI so effective (its ability to process vast amounts of data, identify subtle patterns, and personalize communication) also introduce complex ethical dilemmas, particularly in a high-stakes, emotionally charged field like oncology.

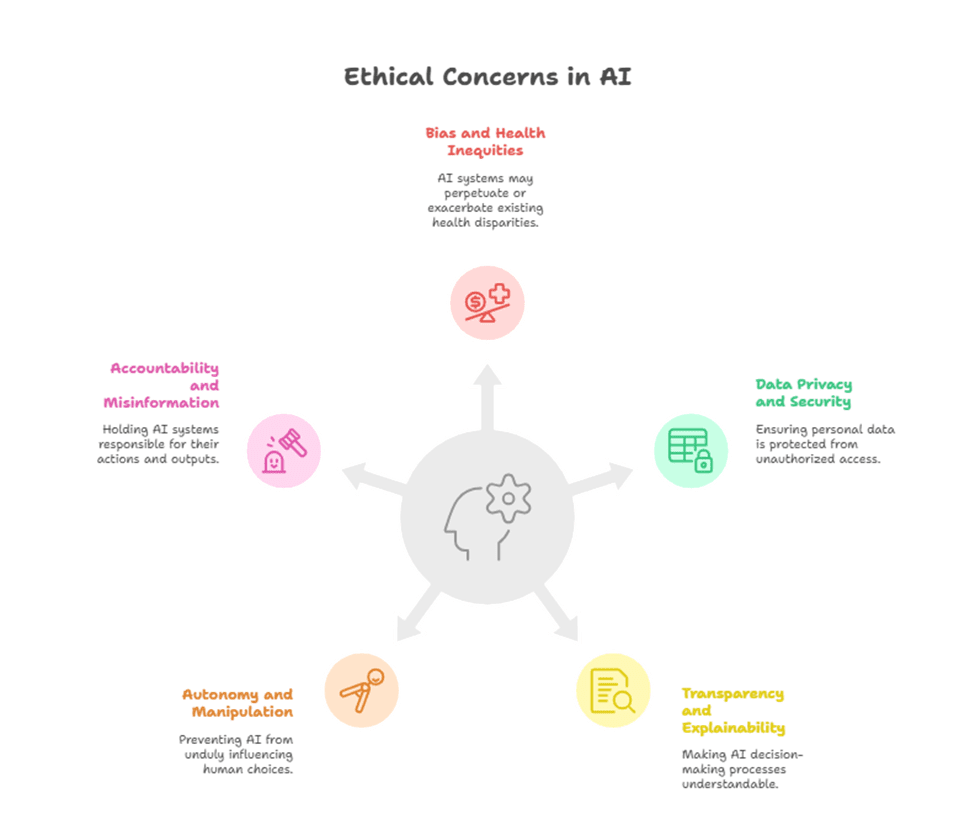

This article critically examines the emerging ethical challenges in AI-driven oncology marketing, assessing whether current practices are adequately addressing these concerns or if we risk “failing the ethics scorecard.” We will delve into five critical areas of ethical concern: 1) Bias and Health Inequities; 2) Data Privacy and Security; 3) Transparency and Explainability; 4) Autonomy and Manipulation; and 5) Accountability and Misinformation.

For each ethical challenge, this article will explore how it manifests in the US oncology market, provide illustrative scenarios, and discuss the implications for both pharma marketers and the oncology community. The discussion is written for pharma managers and urges a proactive approach to ethical AI governance. We will propose a framework for building an ethical AI strategy, drawing on data-driven insights and hypothetical models, to emphasize that technological advancement without robust ethical safeguards not only jeopardizes trust but can also exacerbate existing disparities and undermine the very mission of improving cancer care.

Introduction: The Dual-Edged Sword of AI in Oncology Marketing

The promise of Artificial Intelligence in oncology marketing is compelling. Imagine a world where every oncologist receives only the most relevant, timely, and impactful scientific information tailored precisely to their practice, patient population, and learning preferences. A world where patients are proactively supported through their cancer journey with personalized adherence reminders, side-effect management tips, and empathetic communication, all delivered at the perfect moment. This is the vision that AI-driven marketing offers, transforming the traditionally broad and often inefficient pharma-HCP and pharma-patient interactions into highly intelligent, “segment-of-one” experiences.

The US oncology market, with its rapid innovation, complex treatment paradigms, and diverse patient demographics, stands to benefit immensely from these advancements. However, as AI tools become more sophisticated and deeply integrated into commercial strategies, a critical question arises: Are we adequately addressing the profound ethical implications of these powerful technologies? The same algorithms that can personalize engagement can also inadvertently propagate bias. The data that fuels insights can also be misused or compromised. The persuasive power that drives adherence can, if unchecked, border on manipulation.

This article aims to critically evaluate the ethical scorecard of AI in oncology marketing. It is a call to action for pharma managers to move beyond a reactive stance on ethics and to proactively embed robust ethical frameworks into every stage of their AI strategy. Failing to do so risks not only regulatory backlash and reputational damage but, more importantly, a profound erosion of trust among oncologists and patients – a trust that is foundational to improving cancer care.

1. Bias and Health Inequities

The Problem

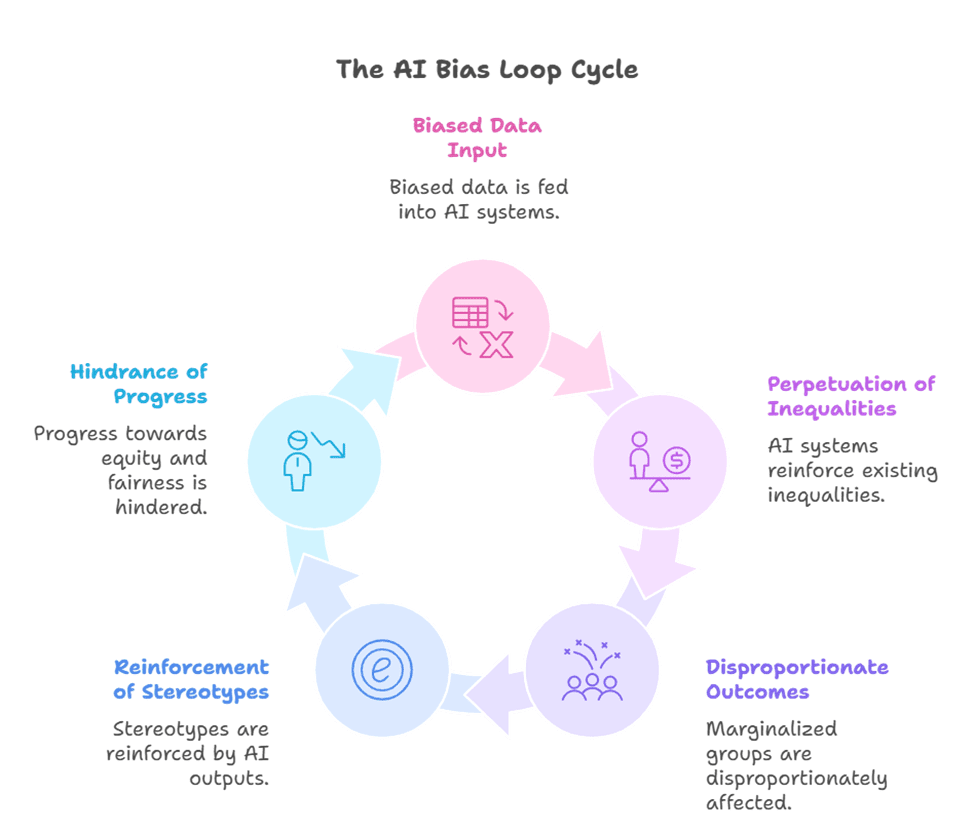

AI models learn from the data they are fed. If that data reflects existing societal biases or historical health inequities, the AI is likely to perpetuate those biases and can even amplify them. In oncology, where significant disparities already exist in access to care, clinical trial representation, and treatment outcomes across racial, ethnic, and socioeconomic lines, biased AI could exacerbate these problems.

Ethical Manifestations in Oncology Marketing

- Targeting Disparities: If an AI model is trained on historical prescribing data that shows lower utilization of a novel therapy in certain minority populations (due to systemic access issues, not clinical inappropriateness), the AI might learn to de-prioritize targeting HCPs who serve those populations, thereby perpetuating the disparity.

- Content Relevance: AI-generated personalized content might inadvertently focus on conditions more prevalent in historically advantaged groups, leading to a lack of relevant information for underserved populations or underrepresented cancer types.

- Clinical Trial Recruitment: AI tools designed to identify potential clinical trial participants might inadvertently exclude diverse populations if the training data for patient identification is skewed towards specific demographics, hindering efforts to improve trial diversity.

The Ethics Scorecard: A Potential Fail

Without deliberate and continuous auditing for bias, AI-driven targeting and personalization can inadvertently widen health equity gaps. Pharma’s role in addressing health disparities in cancer care becomes even more critical with AI.
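For teams that want to put “deliberate and continuous auditing” into practice, a simple starting point is to compare how often a targeting model selects HCPs in different groups. The sketch below is illustrative only: it assumes the model’s output can be exported as a per-HCP “targeted” flag, uses a hypothetical grouping attribute (practice payer mix) as a rough proxy for the populations an HCP serves, and borrows the 0.8 “four-fifths” threshold from US employment-selection guidance as a screening heuristic, not as an industry standard for pharma.

```python
from collections import defaultdict


def selection_rates(records, group_key, selected_key):
    """Share of records in each group that the model selected for outreach."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        if rec[selected_key]:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_report(records, group_key, selected_key, threshold=0.8):
    """Compare each group's selection rate with the highest-rate group.

    A ratio below `threshold` (0.8 here, a borrowed heuristic) flags the
    group for human review; it does not by itself prove bias.
    """
    rates = selection_rates(records, group_key, selected_key)
    reference = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / reference if reference > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flag": ratio < threshold}
    return report


# Hypothetical example: 10 HCPs per group, with the model's targeting decisions.
hcps = (
    [{"payer_mix": "mostly_commercial", "targeted": i < 6} for i in range(10)]
    + [{"payer_mix": "mostly_medicaid", "targeted": i < 2} for i in range(10)]
)

for group, stats in disparity_report(hcps, "payer_mix", "targeted").items():
    print(group, round(stats["rate"], 2), round(stats["ratio"], 2), stats["flag"])
```

In this made-up data, HCPs in Medicaid-heavy practices are selected at one third the rate of those in commercially insured practices, so that group is flagged. A flag is a prompt to ask why (for example, whether the model learned from prescribing history shaped by access barriers, as in the targeting scenario above), not an automatic verdict.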

2. Data Privacy and Security

The Problem

AI’s power in marketing stems from its ability to analyze vast amounts of data, including sensitive protected health information (PHI) and granular HCP behavioral data. The collection, storage, and processing of this data, even when anonymized or aggregated, pose significant privacy and security risks. Breaches or misuse can have devastating consequences for patient trust and regulatory compliance (e.g., under HIPAA).

Ethical Manifestations in Oncology Marketing

- Granular HCP Profiling: AI systems create incredibly detailed “digital phenotypes” of oncologists, tracking their every click, view, and interaction. While intended for personalization, this level of surveillance raises questions about professional privacy and the potential for perceived manipulation.

- Patient Data Linkage (Even Anonymized): While direct patient PHI is typically not used in marketing, aggregated patient data (e.g., from claims, EHRs, or patient support programs) is often used to inform marketing strategy. If not rigorously anonymized and secured, or if re-identification is possible, this poses a major risk.

- Third-Party Data Sharing: Pharma companies often rely on third-party data providers for market insights. The provenance, consent, and security practices of these external data sources must be transparent and robust.

The Ethics Scorecard: A Critical Challenge

The allure of rich data insights must be balanced with an unyielding commitment to privacy and security. A single data breach could shatter decades of trust.
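Re-identification risk in aggregated patient data can be screened for before that data ever reaches a marketing team. One widely used heuristic is k-anonymity: every combination of quasi-identifiers (fields such as a three-digit ZIP prefix, age band, and cancer type, which are not names but can narrow down an individual) should be shared by at least k records. The sketch below uses hypothetical field names and an illustrative k of 3; k-anonymity on its own is not a substitute for HIPAA’s Safe Harbor or Expert Determination de-identification methods.

```python
from collections import Counter


def equivalence_class_sizes(rows, quasi_identifiers):
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def k_anonymity_violations(rows, quasi_identifiers, k):
    """Return the combinations shared by fewer than k rows.

    Small groups are the records most exposed to re-identification when a
    dataset is linked with other sources.
    """
    sizes = equivalence_class_sizes(rows, quasi_identifiers)
    return {combo: count for combo, count in sizes.items() if count < k}


# Hypothetical aggregated extract: no names, but still potentially identifying.
rows = [
    {"zip3": "100", "age_band": "60-69", "cancer_type": "NSCLC"},
    {"zip3": "100", "age_band": "60-69", "cancer_type": "NSCLC"},
    {"zip3": "100", "age_band": "60-69", "cancer_type": "NSCLC"},
    {"zip3": "590", "age_band": "30-39", "cancer_type": "sarcoma"},
]

violations = k_anonymity_violations(rows, ["zip3", "age_band", "cancer_type"], k=3)
print(violations)  # {('590', '30-39', 'sarcoma'): 1}
```

A rare cancer type in a small geographic area is exactly the kind of combination that fails this check, which is why oncology data, with its many rare subtypes, deserves particular care.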

3. Transparency and Explainability (XAI)

The Problem

Many advanced AI models operate as “black boxes”: they deliver powerful predictions or content, but the underlying logic or reasoning is opaque, sometimes even to their developers. In a field like oncology, where critical decisions affect patients’ lives, this lack of transparency is unacceptable.

Ethical Manifestations in Oncology Marketing

- “Why did I get this?” Syndrome: An oncologist receiving a highly personalized message might wonder how pharma knew their specific interest or patient population. If the AI’s targeting logic is a black box, it can erode trust and feel intrusive.

- AI-Generated Content Credibility: While generative AI can draft compelling content, if the source of information or the editing process is not transparent, its scientific credibility, especially for oncologists, will be questioned. Oncologists need to know content is medically accurate, not just algorithmically generated.

- Bias in Recommendations: If an AI recommends a “next best action” for a sales rep based on an opaque algorithm, and that recommendation inadvertently steers them away from a certain demographic, the lack of explainability makes it impossible to detect and correct potential bias.

The Ethics Scorecard: Often Overlooked

The drive for efficiency and personalization can inadvertently create opaque systems. For oncologists, “trust me, the AI knows” is not a viable ethical position.
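One practical answer to “Why did I get this?” is to have the targeting system report the factors behind each decision. For a simple linear scoring model, each feature’s contribution is its weight times its value, so the explanation falls directly out of the model. The sketch below assumes such a model; the feature names and weights are hypothetical. More complex models would need model-agnostic attribution methods (SHAP is one commonly used example), which this sketch does not cover.

```python
def explain_linear_score(weights, features, bias=0.0, top_n=3):
    """Score an HCP with a linear model and return the largest contributors.

    Each contribution is weight * feature value, so all contributions plus
    the bias sum to the score that drove the targeting decision.
    """
    contributions = {name: w * features.get(name, 0.0) for name, w in weights.items()}
    score = bias + sum(contributions.values())
    # Rank by absolute size so strong negative factors are shown too.
    top = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    return score, top


# Hypothetical model weights and one HCP's feature values.
weights = {
    "viewed_nsclc_webinar": 1.5,
    "new_patient_volume": 0.8,
    "days_since_last_contact": -0.02,
}
hcp = {"viewed_nsclc_webinar": 1, "new_patient_volume": 2.0, "days_since_last_contact": 30}

score, reasons = explain_linear_score(weights, hcp)
print(f"score = {score:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

Surfacing reasons internally also supports the bias concern raised above: if a demographic proxy keeps appearing among the top reasons for “next best action” recommendations, that pattern becomes visible and correctable.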

4. Autonomy and Manipulation

The Problem

AI’s ability to hyper-personalize and predict behavior offers unprecedented persuasive power. While the intent is to educate and support, there’s a fine line between effective persuasion and unethical manipulation, particularly when targeting vulnerable patient populations or influencing medical decisions.

Ethical Manifestations in Oncology Marketing

- “Nudge” Ethics: AI-powered “nudges” (e.g., personalized adherence reminders, prompts to engage with specific content) can be beneficial. However, if these nudges become overly frequent, emotionally charged, or designed to bypass rational decision-making, they can erode patient autonomy.

- Targeting Vulnerable Patients: Using AI to identify and target patients experiencing significant emotional distress (e.g., recently diagnosed with aggressive cancer) with overly persuasive or emotionally manipulative content, even if well-intentioned, crosses an ethical boundary.

- Influencing Prescribing Habits: If AI-driven engagement subtly steers oncologists towards a specific (and perhaps more expensive) therapy through relentless, hyper-personalized messaging, without adequately presenting alternatives or potential downsides, it can be seen as undermining clinical independence.

The Ethics Scorecard: A Slippery Slope

The power of personalization must be wielded with extreme care, always prioritizing patient and HCP autonomy over commercial gain.
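The “overly frequent” failure mode described above has a straightforward engineering guardrail: a hard frequency cap that sits outside the optimization model. The sketch below assumes all messages pass through a single gate that sees each send in time order; the limit of two messages per seven days is purely illustrative, not a recommended value.

```python
from collections import deque
from datetime import datetime, timedelta


class FrequencyCap:
    """Rolling-window limit on messages per recipient.

    The cap is enforced outside any engagement-optimization model, so no
    predicted uplift can override it. Assumes `now` values arrive in
    non-decreasing order.
    """

    def __init__(self, max_messages, window):
        self.max_messages = max_messages
        self.window = window
        self._sent = {}  # recipient_id -> deque of send timestamps, oldest first

    def allow(self, recipient_id, now):
        """Return True (and record the send) only if the recipient is under the cap."""
        history = self._sent.setdefault(recipient_id, deque())
        # Forget sends that have aged out of the rolling window.
        while history and now - history[0] >= self.window:
            history.popleft()
        if len(history) >= self.max_messages:
            return False
        history.append(now)
        return True


# Hypothetical policy: at most 2 nudges per patient in any 7-day window.
cap = FrequencyCap(max_messages=2, window=timedelta(days=7))
start = datetime(2024, 1, 1)
decisions = [cap.allow("patient-001", start + timedelta(days=d)) for d in (0, 1, 2, 8)]
print(decisions)  # [True, True, False, True]
```

Keeping the cap as a separate, simple rule (rather than a penalty term inside the model) makes it auditable: anyone can read the policy and verify that it was applied.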

5. Accountability and Misinformation

The Problem

When an AI system makes an error, generates misleading content, or provides an incorrect recommendation, who is accountable? In the context of medical information, errors can have severe consequences. The ease with which generative AI can create content also increases the risk of spreading misinformation if not rigorously fact-checked.

Ethical Manifestations in Oncology Marketing

- “Hallucinations” in Generative AI: Generative AI can sometimes produce plausible-sounding but entirely false or misleading information (“hallucinations”). If unvetted AI-generated content makes its way into marketing materials for oncologists or patients, it could lead to incorrect medical decisions.

- Algorithm-Driven Misinformation Spread: If an AI model is inadvertently trained on or influenced by unverified or biased sources, it could propagate misinformation through its personalized content recommendations.

- Lack of Clear Responsibility: If an AI-driven system leads to a negative outcome (e.g., a patient discontinuing therapy due to misleading information), establishing clear accountability (e.g., the AI developer, the pharma company, the content creator) can be challenging.

The Ethics Scorecard: A Regulatory Flashpoint

Clear lines of accountability and robust human oversight are essential safeguards against the risks of AI-driven misinformation.
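Human oversight is most reliable when the publishing workflow itself enforces it, rather than leaving it to good intentions. The sketch below shows one hypothetical way to do that: content cannot be released until a named reviewer for each required role has signed off, and the release record preserves who approved it and whether the draft was AI-generated. The role names echo the medical-legal-regulatory (MLR) review that pharma content typically undergoes, but the classes and functions here are illustrative, not any specific review platform’s API.

```python
from dataclasses import dataclass, field

# Hypothetical sign-off roles, modeled loosely on medical-legal-regulatory (MLR) review.
REQUIRED_ROLES = frozenset({"medical", "legal", "regulatory"})


@dataclass
class ContentItem:
    content_id: str
    text: str
    ai_generated: bool
    approvals: dict = field(default_factory=dict)  # role -> named human reviewer

    def approve(self, role, reviewer):
        if role not in REQUIRED_ROLES:
            raise ValueError(f"unknown review role: {role}")
        self.approvals[role] = reviewer

    def missing_approvals(self):
        return REQUIRED_ROLES - set(self.approvals)


def publish(item):
    """Release content only when every required role has a named reviewer.

    The returned record keeps the AI-generated flag and the reviewers'
    names, so responsibility for the released text is traceable.
    """
    missing = item.missing_approvals()
    if missing:
        raise PermissionError(f"{item.content_id} blocked; missing sign-off: {sorted(missing)}")
    return {
        "content_id": item.content_id,
        "ai_generated": item.ai_generated,
        "approved_by": dict(item.approvals),
    }


draft = ContentItem("EDU-001", "AI-drafted patient education text", ai_generated=True)
try:
    publish(draft)
except PermissionError as err:
    print(err)

for role, reviewer in [("medical", "Reviewer A"), ("legal", "Reviewer B"), ("regulatory", "Reviewer C")]:
    draft.approve(role, reviewer)
print(publish(draft))
```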

Conclusion: Building an Ethical AI Framework for Oncology Marketing

The integration of AI into oncology marketing is not merely a technological choice; it is a profound ethical one. The potential to personalize, optimize, and streamline engagement offers unprecedented benefits for scientific exchange and patient support in the US oncology market. However, without a deliberate, proactive, and robust ethical framework, pharma risks failing its most important scorecard: the trust of oncologists and the well-being of patients.

To succeed in this new AI era, pharma managers must move beyond a reactive compliance mindset to adopt a proactive “ethics-by-design” approach. This involves:

- Prioritizing Health Equity: Actively audit AI models for bias, ensure diverse training data, and design solutions to bridge, not widen, health disparities.

- Unwavering Data Governance: Implement best-in-class data privacy and security protocols, transparently communicate data usage, and obtain informed consent.

- Embracing Explainable AI: Insist on transparency in AI models, allowing oncologists and internal teams to understand the logic behind recommendations and personalization.

- Upholding Autonomy: Design AI tools that empower, educate, and support, rather than manipulate or coerce, always respecting the autonomy of HCPs and patients.

- Establishing Clear Accountability: Implement rigorous human oversight for all AI-generated content and recommendations, ensuring clear lines of responsibility for any errors or harms.

AI in oncology marketing holds the power to truly transform cancer care journeys. But this transformation must be guided by an unshakeable commitment to ethical principles. By proactively addressing these challenges, pharma can harness AI’s full potential, not just for commercial success, but for the ultimate benefit of patients battling cancer. The ethics scorecard demands nothing less.

The Oncodoc team is a group of passionate healthcare and marketing professionals dedicated to delivering accurate, engaging, and impactful content. With expertise across medical research, digital strategy, and clinical communication, the team focuses on empowering healthcare professionals and patients alike. Through evidence-based insights and innovative storytelling, Hidoc aims to bridge the gap between medicine and digital engagement, promoting wellness and informed decision-making.